Cybersecurity audits have traditionally focused on identifying technical vulnerabilities, ensuring compliance, and reviewing policy implementation. However, a significant majority of cyberattacks stem from human error or manipulation, making the human element a critical area of concern. This paper examines the psychological and behavioral factors that influence cybersecurity outcomes, highlighting how attackers exploit human tendencies through social engineering. It introduces the concept of the “7 Vulnerabilities of the Human Operating System,” a framework inspired by behavioral psychology, which outlines how threat actors manipulate trust, authority, consistency, and social influence. Through real-world case studies and strategic recommendations, the paper proposes methods to integrate human-centric evaluations into cybersecurity audit frameworks, emphasizing the need for training, awareness, and cultural transformation. Recognizing and auditing the human element is vital for enhancing organizational cyber resilience.

Introduction

Cybersecurity audits serve as critical tools for evaluating an organization’s defenses against evolving digital threats. Traditionally, these audits prioritize technical controls such as firewalls, access management systems, and vulnerability scanners, along with compliance with frameworks like ISO/IEC 27001 or the NIST Cybersecurity Framework. While these components are essential, recent studies and incident analyses indicate that human behavior continues to be the most exploited vector in successful cyberattacks.

Commonly cited industry estimates suggest that a significant majority of breaches, between 70% and 90%, involve some form of human error or manipulation. This includes phishing, credential compromise, poor security hygiene, and inadvertent data disclosures. The human factor, long regarded as the weakest link in the cybersecurity chain, is often underrepresented in formal audit processes.

This paper explores the gap between technical assurance and human-centric risk. It advocates for integrating behavioral analysis, psychological evaluation, and social engineering assessments into cybersecurity audits. By understanding how cognitive biases and social triggers are exploited, organizations can better identify vulnerabilities that technical tools alone may not detect.

In particular, this work introduces the “7 Vulnerabilities of the Human OS,” a model based on influence psychology, which explains how attackers manipulate individuals within organizations. By embedding this model into audit methodology, the paper proposes a path toward more holistic, resilient cybersecurity postures.

Background

Today’s Auditing

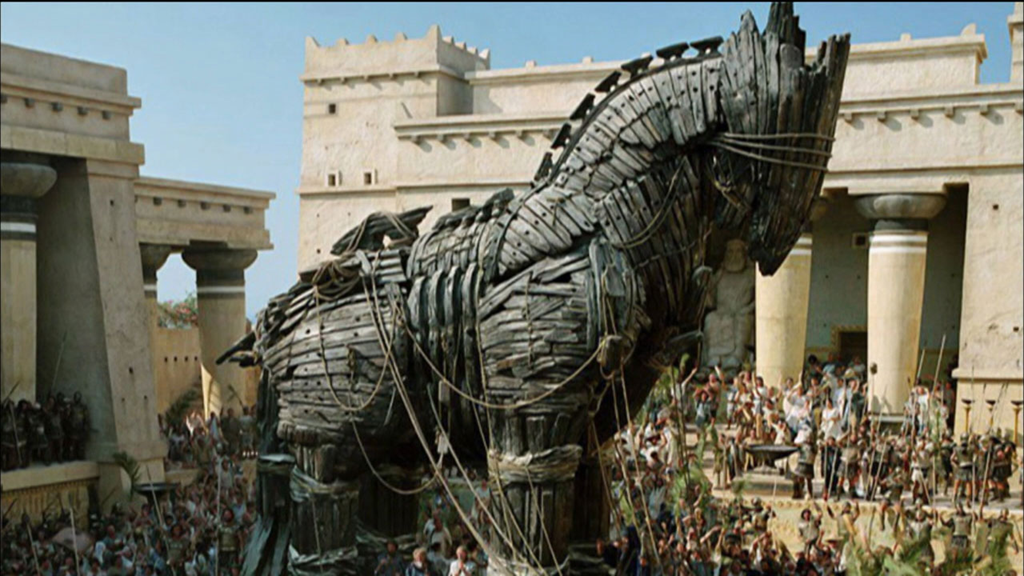

The concept of exploiting human behavior in cybersecurity is not new. Historical references, such as the story of the Trojan Horse recounted in Homer’s Odyssey, illustrate how deception and trust exploitation have long been effective tools in breaching defenses. In the digital age, this principle has only evolved: attackers now use sophisticated psychological techniques and publicly available information to manipulate individuals and gain unauthorized access.

Despite advances in security technology, human elements such as error, oversight, and trust remain difficult to quantify and mitigate. Social engineering, in particular, has emerged as a preferred attack method due to its effectiveness and minimal technical effort. Phishing emails, business email compromise (BEC), pretexting, and baiting are all examples where adversaries bypass security systems by targeting people instead of infrastructure.

Modern cybersecurity audits, however, tend to focus heavily on technical configurations and compliance checklists, often overlooking behavioral risk indicators. This imbalance creates a significant blind spot in audit outcomes. Understanding the evolution and techniques of social engineering is crucial to recognizing the importance of human-centric auditing.

Several high-profile incidents underscore this vulnerability. The 2011 RSA breach, the 2019 Toyota BEC fraud, and the 2020 Twitter Bitcoin scam were all enabled by manipulation of individuals rather than direct exploitation of systems. These cases highlight the urgency of incorporating human factor analysis into security auditing frameworks to improve organizational resilience.

Our Past

The exploitation of human trust for strategic advantage has deep historical roots. One of the earliest and most cited examples is the tale of the Trojan Horse, recounted in Homer’s Odyssey and told most fully in Virgil’s Aeneid. According to legend, after years of futile attempts to breach the walls of Troy, the Greeks devised a plan centered not on force, but on deception. They constructed a massive wooden horse, hid elite soldiers inside, and pretended to retreat from the war. The Trojans, believing the horse to be a peace offering and a symbol of Greek surrender, brought it into their city. That night, while the city slept, the hidden soldiers emerged and opened the gates for the returning Greek army, leading to Troy’s downfall. This story remains a timeless illustration of how manipulation of trust can defeat even the most fortified defenses.

Similarly, in ancient India, the strategist and philosopher Chanakya (also known as Kautilya) laid the foundation for one of the world’s earliest documented intelligence systems. In his seminal work, Arthashastra, Chanakya emphasized the importance of espionage, psychological manipulation, and surveillance for national security and governance. He recognized that an empire’s internal stability and external strength depended significantly on understanding human behavior, identifying traitors, and influencing both allies and adversaries through strategic means. Chanakya’s spy networks were instrumental in protecting the Mauryan Empire and maintaining political order.

Both the Trojan deception and Chanakya’s intelligence philosophy highlight a timeless truth: human vulnerability is a critical security factor. These historical precedents validate the need for modern cybersecurity audits to consider human behavior as a primary dimension of risk analysis.

The 7 Vulnerabilities of the Human OS

While organizations invest heavily in firewalls, intrusion detection systems, and encryption, attackers often bypass these defenses by targeting the human mind. Drawing from behavioral psychology and the science of influence, social engineers exploit predictable cognitive patterns to manipulate individuals. These techniques are consistent across many attacks and can be categorized into seven core principles—collectively referred to here as the “7 Vulnerabilities of the Human Operating System (OS).”

1. Reciprocity

Humans are wired to return favors. Attackers often exploit this by giving something—such as free resources, helpful information, or fake support—before making a malicious request. Victims feel compelled to comply, even if the original “favor” was unsolicited or manipulative.

2. Scarcity

People are more likely to act when they perceive that something is limited. Attackers generate urgency by sending messages with subject lines like “Account will be locked in 1 hour” or “Limited-time update required,” prompting impulsive actions without scrutiny.

3. Authority

Individuals tend to comply with figures perceived to have authority. Cyber criminals impersonate IT administrators, CEOs, or government officials to coerce victims into sharing credentials or executing unauthorized tasks.

4. Liking

We are more likely to trust and follow people we like. Attackers build rapport through shared interests, fake personas, or flattery—often leveraging social media profiles to construct believable and friendly identities.

5. Commitment and Consistency

People prefer to act consistently with their past behavior. Once a victim agrees to a small request (e.g., clicking a harmless link), they are more likely to comply with larger, more dangerous actions later. This principle is commonly used in multi-stage phishing campaigns.

6. Consensus (Social Proof)

When unsure, people look to the behavior of others. Attackers fabricate consensus by stating that “many employees have already updated their settings,” creating a false sense of norm that pushes victims to act.

7. Unity

This relatively newer principle taps into shared identity—national, corporate, religious, or social. Messages that imply “we’re all in this together” or come from insider groups have greater persuasive power, making employees more likely to trust the source.

These vulnerabilities are not flaws in technology, but in human cognition—and they are universally exploitable. Recognizing and assessing these in the context of cybersecurity audits can dramatically improve an organization’s preparedness against social engineering attacks.

How Is It Exploited?

Real-world cyberattacks often mirror the psychological principles outlined in the 7 Vulnerabilities of the Human OS. The following incidents demonstrate how attackers have successfully exploited each of these behavioral patterns:

Reciprocity — 2013 Target Data Breach

Attackers gained access to Target’s network using credentials stolen from a third-party HVAC vendor, which had itself been compromised through a phishing email. Because the vendor had an established, legitimate working relationship with Target, its access drew little scrutiny, illustrating how prior helpful interactions lower skepticism.

Scarcity — 2020 COVID-19 Phishing Campaigns

During the early months of the pandemic, attackers sent phishing emails posing as health organizations, offering “limited access” to vaccines, masks, or safety information. The perceived scarcity and urgency drove recipients to click on malicious links, revealing credentials or downloading malware.

Authority — 2013–2015 Google and Facebook Invoice Scam

An attacker impersonated a major hardware supplier and sent fake invoices to Google and Facebook’s finance departments, citing executive-level purchase orders. Trusting the authority implied in the documentation, employees transferred over $100 million before realizing the deception.

Liking — 2020 Twitter Bitcoin Scam

Hackers compromised Twitter’s internal tools and used verified celebrity accounts to promote a Bitcoin giveaway scam. Users were more inclined to trust the message because it came from public figures they admired—leveraging the principle of liking.

Commitment & Consistency — 2014 Yahoo Spear Phishing

Attackers initially sent benign emails that established a pattern of harmless interaction with Yahoo employees. Later emails in the same thread included malicious attachments. Because recipients had previously interacted with the sender without issue, they were more likely to continue engaging—falling prey to the consistency trap.

Consensus (Social Proof) — 2022 WhatsApp OTP Scam in India

Attackers messaged users from hacked accounts, requesting OTPs for login verification. The victims, seeing messages come from friends or family (others they trust and follow), assumed legitimacy. The attacker used social proof—“if someone I trust is doing it, I can too.”

Unity — 2020 SolarWinds Hack (Initial Infection Vector)

The attackers embedded malicious code into SolarWinds software updates, which were then delivered to thousands of trusted customers, including U.S. government agencies. The assumption that a digitally signed update from a known vendor is trustworthy reflects the unity bias: trust in a shared ecosystem or organization.

These examples affirm that attackers do not need sophisticated tools to breach systems. Instead, a deep understanding of human psychology is often sufficient to exploit trust, authority, and cognitive shortcuts at scale.

Movie References

Cinema has long portrayed the art of deception, manipulation, and psychological exploitation—core techniques in social engineering. These movies not only entertain but also provide insightful, dramatized representations of how human vulnerabilities are exploited in real life.

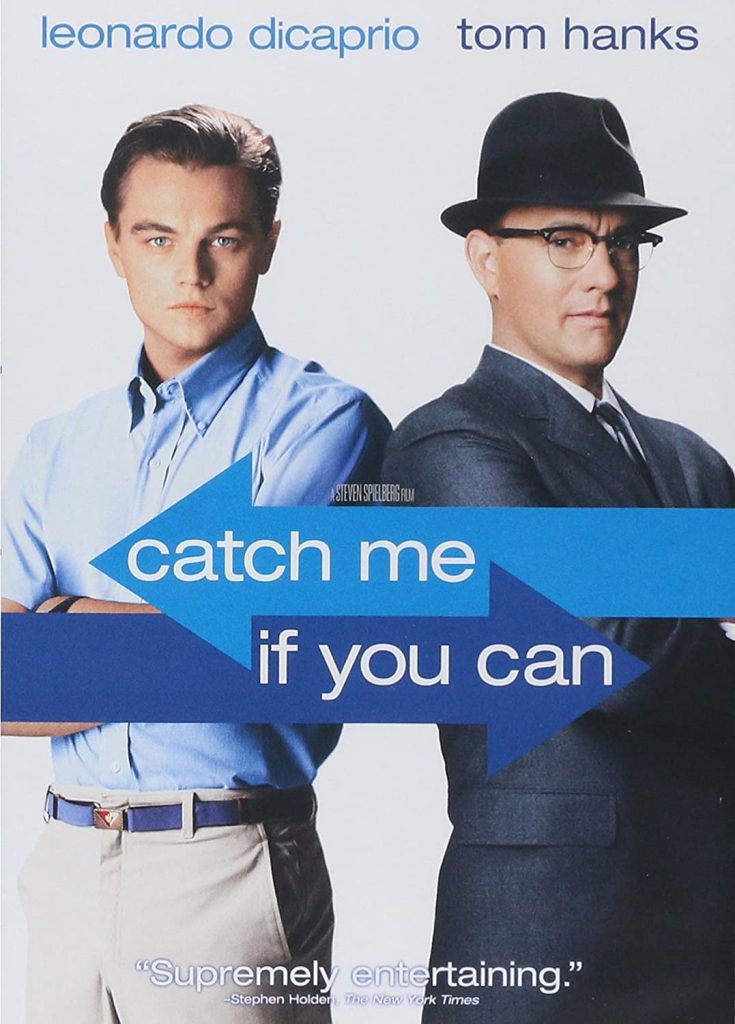

Catch Me If You Can (2002)

Based on the true story of Frank Abagnale Jr., this film is a masterclass in social engineering. Abagnale impersonates a pilot, doctor, and lawyer by exploiting authority, trust, and appearance. He manipulates individuals and institutions using charm, confidence, and fake credentials—perfect illustrations of authority, liking, and unity.

Sneakers (1992)

This cybersecurity-themed heist film shows a team of experts using both technical skills and social engineering to break into secure systems. In one scene, they extract sensitive data by posing as service technicians—demonstrating how liking and reciprocity are used to disarm suspicion.

Mr. Robot (TV Series, 2015–2019)

While primarily technical, Mr. Robot frequently explores psychological exploitation. The protagonist, Elliot, uses phishing emails, USB drops, and voice manipulation to bypass digital security through human vectors. It vividly portrays scarcity, consensus, and commitment tactics.

Now You See Me (2013)

This heist thriller centers around illusionists who use misdirection and behavioral manipulation to steal and escape. Though not a traditional cybersecurity film, it heavily features social proof, scarcity, and unity as means to control audience perception and law enforcement response.

The Italian Job (2003)

While known for its car chases, this film also includes social engineering scenes—such as gaining access to secure buildings using impersonation and pretexting. The planning scenes reflect real-world tactics used in red teaming and physical security tests.

These portrayals, though dramatized, underscore the core principle of social engineering: exploiting the human operating system, not the digital one. They also highlight the importance of training and awareness in recognizing such threats in both personal and professional settings.

Integrating Human Factors into Audit Frameworks

Traditional cybersecurity audits emphasize system configurations, vulnerability management, access controls, and compliance with security policies. However, these methods often overlook behavioral vulnerabilities, which are frequently the entry points for sophisticated attacks. To address this gap, modern audit frameworks must integrate human factor assessments as a core component of cybersecurity evaluation.

Auditing the human element requires a shift in mindset—from static control checks to dynamic behavioral risk evaluation. This can be achieved by embedding the following practices into existing audit methodologies:

• Phishing Simulations

Regular, controlled phishing campaigns help evaluate employee awareness and susceptibility. These simulations also provide valuable data on response time, reporting behavior, and departmental weaknesses.

• Social Engineering Red Team Assessments

Red teams should simulate real-world pretexting, impersonation, and baiting attacks. Evaluating how individuals respond to unauthorized access attempts or deceptive queries provides insights into procedural and psychological gaps.

• Security Awareness and Training Review

Audit processes should include the review of awareness programs—frequency, effectiveness, and relevance to current threats. Assessment of training completion rates and retention through knowledge tests offers measurable indicators of human readiness.

• Behavioral Risk Profiling

Behavioral analytics tools can help identify anomalies in user behavior, such as unusual login times, access patterns, or data transfers. These indicators assist in uncovering insider threats or compromised accounts.

• Human-Centric Metrics in Risk Scoring

Audit reports should introduce human factor metrics—such as social engineering susceptibility rate, policy adherence variance, and training impact scores—into the overall security posture assessment.

• Cultural Evaluation

Cybersecurity culture plays a significant role in how policies are practiced. Interviews, anonymous surveys, and focus groups can help auditors gauge whether employees view cybersecurity as a shared responsibility or as a checkbox task.

By integrating these human-focused dimensions, auditors gain a comprehensive view of an organization’s security posture—one that includes not just what systems are doing, but how people are behaving. This holistic approach is essential to building a truly resilient cybersecurity ecosystem.
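As an illustration of how the phishing simulation results and human factor metrics described above could feed an audit report, the following is a minimal sketch in Python. The record schema (`SimulationResult`), the function name `department_metrics`, and the metric names are hypothetical and chosen only for this example; they are not drawn from any standard or tool.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class SimulationResult:
    """One employee's outcome in a simulated phishing campaign (hypothetical schema)."""
    department: str
    clicked: bool                       # followed the simulated malicious link
    minutes_to_report: Optional[float]  # None if the email was never reported


def department_metrics(results):
    """Aggregate per-department susceptibility and reporting indicators."""
    by_dept = {}
    for r in results:
        by_dept.setdefault(r.department, []).append(r)

    metrics = {}
    for dept, rows in by_dept.items():
        # Only employees who actually reported contribute a reporting time.
        report_times = [r.minutes_to_report for r in rows
                        if r.minutes_to_report is not None]
        metrics[dept] = {
            "recipients": len(rows),
            # Share of recipients who clicked the simulated link.
            "susceptibility_rate": sum(r.clicked for r in rows) / len(rows),
            # Share of recipients who reported the email at all.
            "reporting_rate": len(report_times) / len(rows),
            "median_minutes_to_report": median(report_times) if report_times else None,
        }
    return metrics


if __name__ == "__main__":
    sample = [
        SimulationResult("finance", True, None),
        SimulationResult("finance", False, 10.0),
        SimulationResult("engineering", False, 5.0),
    ]
    for dept, values in sorted(department_metrics(sample).items()):
        print(dept, values)
```

Reporting rate is tracked separately from susceptibility because a department where few people click but nobody reports may still leave the security team blind to a live campaign.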

What is not Social Engineering?

While social engineering relies on psychological manipulation to deceive individuals, it is often confused with other forms of cybersecurity incidents that do not involve direct human interaction or influence. To strengthen audit clarity and training effectiveness, it is important to distinguish between social engineering and unrelated incidents.

• Technical Exploits

Attacks such as buffer overflows, SQL injection, cross-site scripting (XSS), or zero-day exploits target vulnerabilities in software, not people. These attacks are executed without needing to trick a human user and are often automated or executed through direct code manipulation.

• Human Error (Without Manipulation)

Not all mistakes are caused by social engineering. For example, accidentally sending a confidential email to the wrong recipient, misconfiguring access controls, or forgetting to log out of a terminal are human errors—but they are not examples of social engineering unless manipulation was involved.

• Malware Infection via Drive-by Downloads

If a user unknowingly downloads malware from a compromised website without being coaxed or persuaded to do so, it is a technical exploit. However, if the user is tricked into clicking the link through a crafted email or message, then it crosses into social engineering territory.

• Insider Threats (Without Deception)

Employees who intentionally leak data or misuse access privileges for personal gain or sabotage, without being manipulated, represent malicious insiders—not social engineering victims. Social engineering focuses on external actors influencing insiders, not insiders acting on their own.

• Credential Sharing Due to Convenience

When employees share passwords or use insecure practices for the sake of convenience or speed, without being prompted or tricked, this reflects poor security culture or training—not an active social engineering attack.

Understanding these distinctions helps organizations focus their defensive strategies appropriately. Social engineering audits should specifically assess susceptibility to influence, deception, and manipulation, rather than conflating them with general human or system failures.

Training and Culture Building

Technology alone cannot prevent social engineering attacks. A resilient defense posture depends on a security-aware workforce that consistently practices safe behaviors and internalizes cybersecurity as a shared responsibility. Building this culture requires sustained investment in education, engagement, and leadership-driven initiatives.

• Beyond One-Time Training

Annual cybersecurity training sessions are insufficient in a threat landscape that evolves monthly. Organizations must adopt continuous learning models that incorporate microlearning, scenario-based simulations, and real-time updates on emerging tactics. This helps employees retain knowledge and apply it under pressure.

• Simulated Social Engineering Exercises

Phishing simulations, vishing calls, baiting tests, and physical social engineering scenarios should be regularly conducted to evaluate preparedness. When employees fall for simulated attacks, immediate feedback and follow-up learning create teachable moments without punitive consequences.

• Gamification and Role-Specific Content

Interactive, gamified training modules increase participation and retention. Additionally, content should be tailored to job roles—what a sysadmin needs to know differs from what’s relevant to a marketing executive or finance clerk. Customization makes training feel relevant, not generic.

• Leadership and Tone from the Top

A strong security culture starts with leadership. Executives must model good security behavior, support security teams visibly, and integrate cybersecurity priorities into organizational strategy. Employees are more likely to care about security when they see it prioritized from the top.

• Security as a Daily Habit

Security must shift from being viewed as a checklist to a day-to-day habit. Posting visual reminders, using internal communications to share phishing trends, recognizing vigilant employees, and integrating security into onboarding processes are ways to reinforce this mindset.

• Measuring Cultural Maturity

Organizations can use surveys, quizzes, and incident response data to gauge security culture maturity over time. Metrics such as reporting rates, response time to simulations, and training completion can inform both audit findings and program improvements.

Ultimately, technical controls form the perimeter, but culture shapes the core. A well-informed, skeptical, and empowered workforce serves as the best frontline defense against social engineering threats.
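To make the idea of gauging cultural maturity over time more concrete, the sketch below compares a metric across consecutive measurement periods. It is only one possible approach: the field names (`period`, `reporting_rate`, `median_minutes_to_report`), the sample figures, and both function names are assumptions made for illustration.

```python
def period_deltas(history, metric):
    """Change in `metric` between each pair of consecutive periods."""
    if len(history) < 2:
        raise ValueError("need at least two periods to measure a trend")
    return [
        (later["period"], round(later[metric] - earlier[metric], 4))
        for earlier, later in zip(history, history[1:])
    ]


def is_improving(history, metric, higher_is_better=True):
    """True if the metric never moved in the wrong direction between periods."""
    deltas = [delta for _, delta in period_deltas(history, metric)]
    if higher_is_better:
        return all(delta >= 0 for delta in deltas)
    return all(delta <= 0 for delta in deltas)


if __name__ == "__main__":
    # Hypothetical quarterly figures, not real data.
    history = [
        {"period": "Q1", "reporting_rate": 0.20, "median_minutes_to_report": 45},
        {"period": "Q2", "reporting_rate": 0.35, "median_minutes_to_report": 30},
        {"period": "Q3", "reporting_rate": 0.50, "median_minutes_to_report": 32},
    ]
    print(period_deltas(history, "reporting_rate"))
    print(is_improving(history, "median_minutes_to_report", higher_is_better=False))
```

Looking at direction of change rather than a single snapshot fits the emphasis on long-term behavior; an auditor would still need to judge whether a small regression in one period is noise or a genuine decline.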

Challenges and Limitations

While integrating human factors into cybersecurity audits offers significant advantages, the process is not without challenges. Unlike technical controls that can be measured, logged, and standardized, human behavior is dynamic, context-dependent, and often resistant to quantification. These characteristics introduce several limitations:

• Subjectivity in Evaluation

Unlike firewall rules or password policies, human behavior lacks clear thresholds or benchmarks. Auditors must rely on surveys, interviews, or behavioral observations, which may be subjective or inconsistent. This makes it difficult to establish uniform standards for human-centric audit components.

• Resistance and Fear of Testing

Employees may view simulated phishing or social engineering tests as entrapment or punishment, especially if prior training was inadequate. Poorly communicated simulations can damage trust and morale, making future engagements more difficult.

• Balancing Privacy and Oversight

Monitoring employee behavior for security risks—such as behavioral analytics or anomaly detection—can raise privacy concerns. Organizations must tread carefully to ensure that audit activities respect ethical boundaries and comply with privacy regulations like the GDPR.

• Cultural and Geographic Diversity

Security behavior is often influenced by local culture, communication styles, and organizational hierarchies. What works for one region or office may not apply globally. Designing culturally sensitive, globally scalable training and audit processes is a significant challenge.

• Lack of Skilled Auditors

Evaluating social engineering preparedness requires interdisciplinary knowledge—cybersecurity, behavioral psychology, and risk assessment. Many auditors are highly skilled in technical domains but may lack training in human behavior analysis.

• Changing Attack Techniques

Social engineering tactics evolve rapidly. New phishing formats, deepfake impersonations, and AI-driven scams can outpace the training and simulation modules organizations have in place. Audit criteria must be updated frequently to reflect the latest threat landscape.

• Measurement Fatigue

Over-reliance on quizzes, simulations, and compliance tracking can lead to employee disengagement, especially when they are overused or poorly designed. This can reduce the effectiveness of even the best-intentioned awareness programs.

Despite these limitations, the benefits of integrating human factors into audits outweigh the drawbacks. The key lies in continuous adaptation, ethical implementation, and a focus on long-term behavior change rather than one-time compliance.

Future Directions

As cyber threats continue to grow in complexity, so must the strategies used to defend against them. The integration of human factors into cybersecurity audits is still in its early stages, but emerging technologies and behavioral science advancements offer promising pathways for refinement and scalability. The future of audit frameworks will increasingly rely on dynamic, data-driven, and adaptive approaches to address the ever-evolving human threat vector.

• AI-Powered Behavioral Analytics

Artificial Intelligence (AI) and Machine Learning (ML) can be used to establish behavioral baselines and detect anomalies in user activity. These tools can identify subtle indicators of insider threats, compromised accounts, or social engineering in progress—enhancing both audit accuracy and real-time prevention.

• Gamified Continuous Learning Platforms

To combat awareness fatigue, organizations are investing in gamified security training platforms that adapt based on user performance. These tools make learning interactive and personalized, reinforcing knowledge through realistic simulations, quizzes, and rewards systems.

• Standardization of Human-Centric Metrics

One of the major gaps in current audit processes is the lack of standardized metrics for assessing human vulnerability. Future audit frameworks should develop agreed-upon KPIs such as phishing response time, susceptibility index, training retention rate, and reporting effectiveness.

• Cross-Disciplinary Collaboration

Future audits may involve not just cybersecurity professionals, but also psychologists, educators, and organizational behavior experts. This interdisciplinary approach can lead to more holistic evaluations and effective remediation strategies.

• Proactive Culture Audits

Instead of reactive assessments post-incident, organizations are expected to implement culture audits that periodically measure employee attitudes, trust levels, and behavioral risk perceptions. This proactive insight can guide better training and policy development.

• Adaptive Risk Scoring

Audits of the future will likely use real-time risk scores for users, teams, and departments—factoring in behavior, access level, role sensitivity, and training history. These adaptive models can help prioritize resources and focus on high-risk segments dynamically.

• Ethical Auditing and Privacy-By-Design

As human-centric auditing becomes more data-intensive, ethical considerations will play a larger role. Incorporating privacy-by-design principles and transparent communication will be essential to maintain trust while collecting behavioral insights.

The convergence of behavioral science, advanced analytics, and adaptive learning platforms represents the next evolution in cybersecurity audits. These innovations will empower organizations to not only detect and respond to threats, but to preempt them—through a deeper understanding of the human layer.
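The behavioral baseline and adaptive risk scoring ideas above can be sketched in a few lines of Python. This example substitutes a simple statistical baseline (a z-score on login hour) for a trained ML model, and the weights, the 3-sigma cap, the one-year training horizon, and all function names are illustrative assumptions rather than recommended values.

```python
from statistics import mean, pstdev

# Illustrative weights only; a real program would calibrate these.
WEIGHTS = {"behavior": 0.4, "access": 0.3, "role": 0.2, "training": 0.1}


def login_hour_zscore(baseline_hours, observed_hour):
    """Distance of a login hour from the user's baseline, in standard deviations.

    Simplification: hours are treated as linear, so wrap-around at
    midnight is ignored.
    """
    mu = mean(baseline_hours)
    sigma = pstdev(baseline_hours)
    if sigma == 0:
        # A perfectly regular user: any deviation at all is maximally unusual.
        return 0.0 if observed_hour == mu else float("inf")
    return abs(observed_hour - mu) / sigma


def adaptive_risk_score(anomaly_z, access_level, role_sensitivity, days_since_training):
    """Combine behavior, access, role, and training history into a 0-100 score.

    `access_level` and `role_sensitivity` are expected in the range [0, 1].
    """
    components = {
        "behavior": min(anomaly_z / 3.0, 1.0),            # z >= 3 counts as maximal
        "access": access_level,
        "role": role_sensitivity,
        "training": min(days_since_training / 365.0, 1.0),  # stale after a year
    }
    return round(100 * sum(WEIGHTS[name] * value for name, value in components.items()), 1)


if __name__ == "__main__":
    usual_hours = [9, 9, 10, 10]            # hypothetical login history
    z = login_hour_zscore(usual_hours, 3)   # a 3 a.m. login
    print(adaptive_risk_score(z, access_level=0.5, role_sensitivity=0.5,
                              days_since_training=0))
```

Because the score is recomputed from current inputs, it shifts as behavior, access, or training status changes, which is the adaptive property described above. Any such per-user scoring would also need to respect the privacy-by-design considerations raised in this section.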

Conclusion

As cyber threats become increasingly sophisticated, attackers are focusing less on breaching firewalls and more on exploiting the human mind. Social engineering has proven to be a consistent and highly effective method of attack, targeting individuals’ trust, decision-making, and psychological biases. Despite this, traditional cybersecurity audits often underrepresent the human factor, placing disproportionate emphasis on technical compliance and system hardening.

This paper has highlighted the critical role that human behavior plays in an organization’s security posture. Through the lens of the 7 Vulnerabilities of the Human OS, we explored how real-world incidents, spanning phishing scams, impersonation fraud, and insider manipulation, demonstrate the need for behavioral assessments in audits. Additionally, we presented historical and cinematic illustrations to show how timeless and universal these principles are.

To address this pressing gap, we proposed the integration of human-centric tools and methodologies—such as phishing simulations, cultural audits, and AI-powered behavioral analytics—into existing audit frameworks. While challenges such as subjectivity, privacy concerns, and evolving threats remain, the benefits of a more holistic audit approach are undeniable.

Ultimately, strengthening cyber resilience requires acknowledging that people are not just endpoints, but integral parts of the security ecosystem. Embedding human factor evaluation into audit strategies is no longer optional; it is essential. By doing so, organizations can move beyond mere compliance and toward proactive, adaptive, and psychologically aware cybersecurity governance.